Our Products

Kernelize provides reference backends for compilers and runtimes, saving hardware teams months of development time.

Triton

The key enabling technology for day-0 support

Triton makes day-0 support for new models possible. Kernelize actively contributes to the Triton language and compiler and uses it to generate optimized kernels for new hardware targets, so that vLLM and Ollama can run on alternative hardware.

Key Features:

- Language and compiler for parallel programming

- Python-based programming environment

- Generates optimized kernels for new hardware

- Kernelize actively contributes to development

Triton CPU

Triton backend that supports x86, ARM and RISC-V

Triton CPU is a Triton backend that generates optimized kernels for CPUs. It serves both as a CPU backend for Triton and as a starting point for software developers to build their own backend.

Key Features:

- Triton backend that generates optimized kernels for CPUs

- Supports existing Triton code

- Leverages existing Triton knowledge and tools

- Fork the open-source GitHub repo to save months on your Triton compiler development

TritonBench Dashboard

Monitor and analyze performance metrics for Triton operators

TritonBench Dashboard displays performance information about key Triton operators on the target hardware. Data for PyTorch releases is shown publicly, and nightly data is available to TritonBench subscribers.

Key Features:

- Performance testing for key kernels

- Nightly data for developers

- Based on https://github.com/meta-pytorch/tritonbench

Nexus

Integrate Triton into your runtime for day-0 support of new models on the latest NPUs, CPUs and GPUs

Nexus integrates Triton kernels into an inference framework backend. It gathers hardware information and helps configure runtime frameworks to use the best kernels with no user configuration.

Key Features:

- Extends existing inference platform runtimes

- Optimizes layers on new target inference hardware

- Works with vLLM and Ollama

- Seamless integration with existing workflows
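
The idea of gathering hardware information and picking kernels without user configuration can be illustrated with a minimal sketch. This is not the Nexus API: every name here (`HardwareInfo`, `normalize_arch`, `detect_hardware`, `select_kernel`, and the kernel variant strings) is hypothetical, and the sketch only assumes that kernel variants can be keyed by CPU architecture family.

```python
import os
import platform
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareInfo:
    """Hypothetical summary of the host; not a Nexus data structure."""
    arch: str       # normalized ISA family: "x86", "arm", "riscv", or "unknown"
    cpu_count: int  # logical cores reported by the OS


def normalize_arch(machine: str) -> str:
    """Map a platform.machine() string to a coarse architecture family."""
    m = machine.lower()
    if m in ("x86_64", "amd64", "i386", "i686"):
        return "x86"
    if m.startswith(("arm", "aarch64")):
        return "arm"
    if m.startswith("riscv"):
        return "riscv"
    return "unknown"


def detect_hardware() -> HardwareInfo:
    """Gather basic host information using only the standard library."""
    return HardwareInfo(
        arch=normalize_arch(platform.machine()),
        cpu_count=os.cpu_count() or 1,
    )


# Hypothetical registry mapping an architecture family to a kernel variant name.
KERNEL_VARIANTS = {
    "x86": "matmul_x86",
    "arm": "matmul_arm",
    "riscv": "matmul_riscv",
}
FALLBACK_KERNEL = "matmul_generic"


def select_kernel(hw: HardwareInfo) -> str:
    """Pick the variant for the detected architecture, or a generic fallback."""
    return KERNEL_VARIANTS.get(hw.arch, FALLBACK_KERNEL)


if __name__ == "__main__":
    hw = detect_hardware()
    print(f"{hw.arch} ({hw.cpu_count} cores) -> {select_kernel(hw)}")
```

A real integration would likely consider more than the architecture family (vector extensions, cache sizes, accelerator presence), but the shape is the same: detect once, then let a lookup decide so the user never has to.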

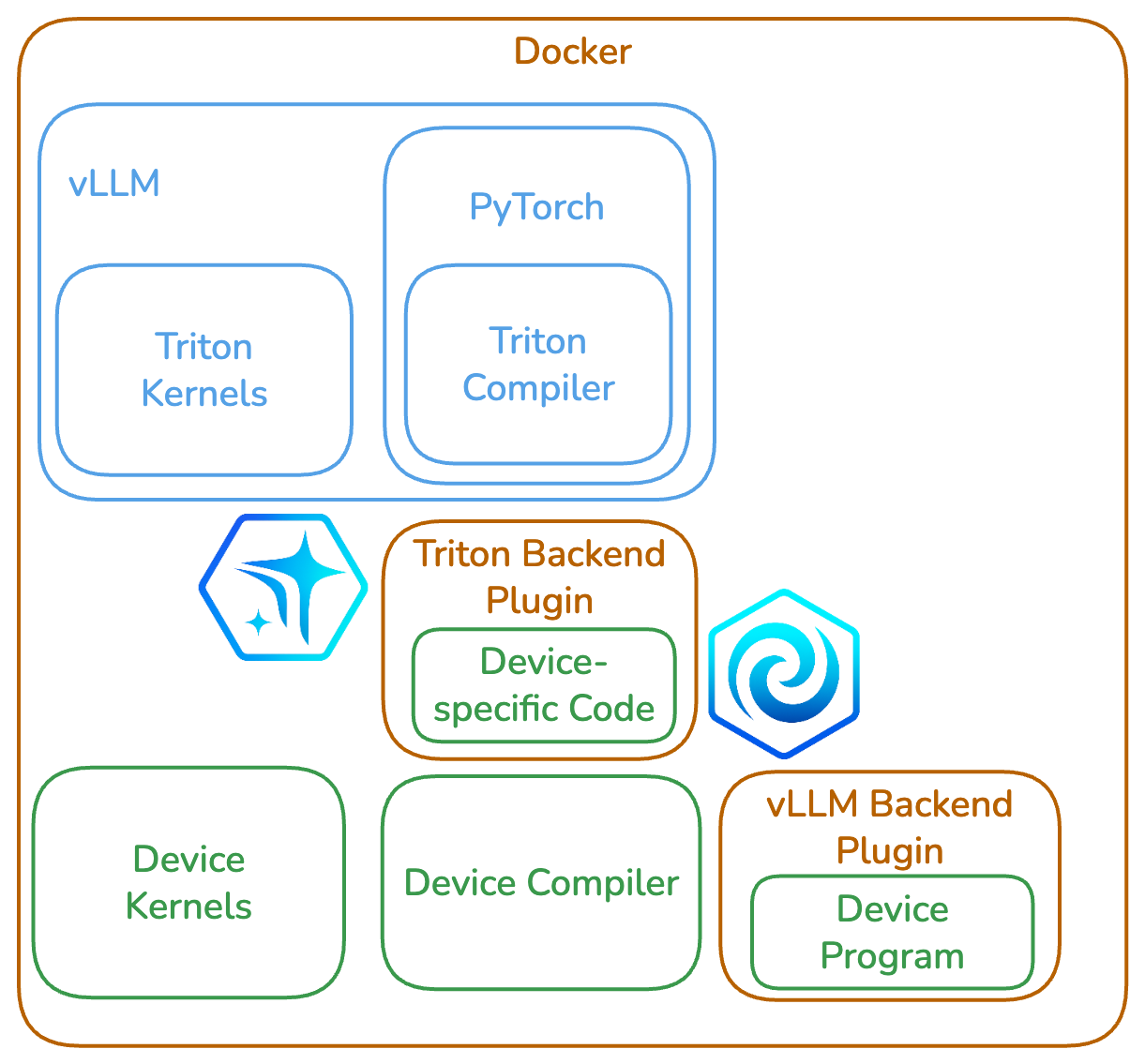

Docker Release

Docker container for each hardware endpoint

Kernelize releases a Docker container for each hardware endpoint with everything required to run inference on the target platform. The example below shows a vLLM flow and where Nexus and Triton CPU fit into it.