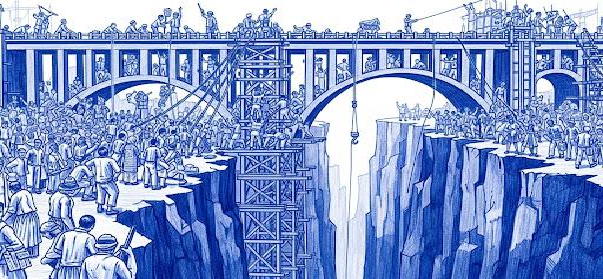

Community Beats Code: How to Bridge the NPU Software Gap

The Path to Efficient AI, Part 3

Introduction

In the previous two posts, we discussed the rise of NPU hardware and how GPU-to-NPU migration requires software compatibility at three points: model language, abstract kernels and runtime API. The communities supporting model languages are essential, but they sit far up the software stack from an NPU. In this post we focus on how NPU software developers must engage with the Triton community to drive compatibility around kernels and runtimes.

Forming open-source initiatives or using open-source software is not enough to drive migration. AI software is complex, messy and ever-changing. NPU companies must be supported by the same open-source communities, like the Triton community, that are already driving GPU software advances. To give context, we will cover our own experiences with great projects that did not keep up with AI advances. Then we will highlight how the Triton community is the bridge across the NPU software gap.

Our Experience with Great AI Projects that were Left Behind

The past ten years are littered with great AI software projects that were left behind. Some projects were built without considering how their environment was changing. Others focused too narrowly on efficiency for a few devices at the expense of compatibility. We will focus only on the projects that we worked on.

Simon started compiler development for an early NPU back in 2015 at Knupath. Many of the NPU hardware concepts grew out of DSP processors, but data movement patterns and the ratio of compute to data transfers are different for AI. An entirely new programming model was designed to describe and optimize AI workloads. Many of the concepts in that programming model are common today, but the lack of compatibility made it too difficult to migrate from DSPs or GPUs, and Knupath eventually closed.

At about the same time, Bryan was leading the Catapult team at what is now Siemens EDA. His team supported AI hardware development with several companies, including Nvidia Research. Many companies at the time developed prototype hardware for AI, and, even 10 years ago, the basic form of NPUs was the same. Most early NPUs never left the factory because the software teams couldn’t “light up” the hardware.

At Samsung, Simon worked on OpenCL and NNAPI to provide a standard layer for communicating with CPUs and GPUs. These two standards are a good example of what happens without engaging the open-source community. The standards moved too slowly, and the open-source community eventually gave up and moved on to other projects. Later, at AMD, Simon worked on rocMLIR, which once again did not provide enough kernel compatibility with Nvidia and was left behind.

The Triton Community as a Common Ground for NPU and GPU Compilers

Both Simon and Bryan joined AMD near the beginning of 2021. Bryan started a compiler team targeting the NPU that is now found in AMD’s Strix and Telluride devices. He built a common framework to allow multiple NPU compiler teams to work together. The scope was only within one company, but the same rules apply across compiler teams. The best way to bring teams together is common model languages, abstract kernels and runtime APIs. Bryan helped bring technology together at these three points without giving up key capabilities for specialized compilers.

Simon moved to Triton after a short time on rocMLIR. One key difference is that Triton did not start inside a hardware company. Instead of a hardware company building a proprietary solution and then trying to generalize it, Triton takes what is already available and builds on top of it. The architecture is built around tile-based programming and autotuning: the optimization engine divides operations into tiles and runs experiments on the hardware to choose among configurations, instead of guiding the optimizations directly with heuristics.
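To make the tiling-plus-experiments idea concrete, here is a minimal sketch in plain Python. It is not Triton code and does not use Triton's API: the names `tiled_matmul` and `autotune`, the matrix size, and the list of candidate tile sizes are all hypothetical choices made for illustration. It only shows the pattern described above: split the work into tiles, time each candidate tile size on the machine at hand, and keep the fastest.

```python
import time


def tiled_matmul(a, b, tile):
    """Multiply two matrices (lists of lists), one tile-by-tile block at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    # Outer loops walk over tiles; inner loops do the work inside one tile.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_c = a[i], c[i]
                    for kk in range(k0, min(k0 + tile, k)):
                        a_ik, row_b = row_a[kk], b[kk]
                        for j in range(j0, min(j0 + tile, m)):
                            row_c[j] += a_ik * row_b[j]
    return c


def autotune(kernel, args, candidates, repeats=3):
    """Time the kernel once per candidate tile size and return the fastest.

    The choice comes from measurement on the current machine,
    not from a heuristic about what 'should' be fastest.
    """
    timings = {}
    for tile in candidates:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            kernel(*args, tile)
            best = min(best, time.perf_counter() - start)
        timings[tile] = best
    return min(timings, key=timings.get), timings


# Hypothetical problem size and candidate list, chosen only to keep the demo fast.
n = 48
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i - j) for j in range(n)] for i in range(n)]
best_tile, timings = autotune(tiled_matmul, (a, b), candidates=[4, 8, 16, 48])
print("fastest tile size on this machine:", best_tile)
```

The winning tile size depends on the machine, which is the point: the same kernel description can be retuned for a different device without rewriting it. A real compiler would tune many more parameters than a single tile size, so treat this as a sketch of the approach rather than a description of Triton's optimizer.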

The open-source and research communities love the Triton approach. Triton has 16k stars and hundreds of contributors on GitHub. The Triton community drives GPU AI innovation. It includes dozens of experienced researchers, experts in model training, like Unsloth, and in LLM serving, like vLLM, as well as learning resources like LeetGPU. NPU companies need to tap into these resources to make sure they do not get left behind.

Triton is designed to run on top of low-level compilers and runtimes. Compiler teams only need to provide hooks for Triton; they do not need to disclose how their compiler works. Even without access to the compiler, a Triton developer can identify an issue and then build and test a fix. Some fixes can be merged back into proprietary compilers, while other features, like paged attention, are better supported outside the core compiler, in the kernel.

The Path Ahead

The path to efficient AI depends on two things: the cost of switching to the next generation of NPUs must come down, and a vibrant open-source community must constantly work to keep that cost low. Many open-source communities involved in AI focus on the more abstract parts of the tool chain. Examples include the communities around PyTorch and ONNX for model languages, and around open-source foundation models like Llama. As you move down the software stack, the Triton community is the only significant open-source community focused on abstract kernels and runtimes.

To the NPU compiler teams of the world, do not fall into the traps of over-specializing or solving yesterday’s problems. We have already seen too many good projects get left behind. The Triton community already sits at the cutting edge of AI and community experts want to help you.

If you want to know more about the Triton community, you can contact us at info@kernelize.ai.

— Simon & Bryan